Hi again! Wade Walker here: engineer, would-be scientist, and second-time tutorializer.

I return as well! Dashing being-about-town and nascent heading-writer “Interstitius”, at your service.

What can I say? He brings a unique sensibility to his work. Anyway, in our last tutorial, Stish and I showed how to create the skeleton of a scientific app using Eclipse RCP and Java OpenGL (JOGL, which I like to pronounce “joggle”).

This time, I’ll show how to speed up rendering by using OpenGL’s “vertex buffer objects” instead of glBegin and glEnd like we did last time. I’ll also keep evolving the tutorial towards a “real” scientific app by making the data source more like scientific data.

Science, ho!

Formerly our method of drawing was easy, but slow

This is how you draw one quadrilateral (what we call a “square”, “rectangle”, “trapezoid”, or “rhombus” in English, except that the last two are technically Greek) in old-style OpenGL:

glBegin( GL_QUADS );

glVertex3f( -1.0f, 1.0f, 0.0f ); // top left

glVertex3f( 1.0f, 1.0f, 0.0f ); // top right

glVertex3f( 1.0f,-1.0f, 0.0f ); // bottom right

glVertex3f( -1.0f,-1.0f, 0.0f ); // bottom left

glEnd();

Perfectly straightforward – it draws one quad, which has four vertices, as quads often do. Easy to understand, easy to write. So why not do everything like this?

In a somewhat predictable inversion, our new method is difficult, but fast

Here’s why we can’t: in a real app, you’ve got a huge mess of stuff between glBegin and glEnd, and the OpenGL driver will struggle to optimize it into something efficient that it can send to the graphics card. Sometimes this works OK, but other times the driver can’t handle it well, and the result is something that’s correct, but slow.

Here’s how we fix it. We create a vertex buffer object, which is just a big chunk of memory that holds the objects we want to draw. Then we send it to our graphics card all at once, where it gets drawn super-fast by dedicated hardware.

The new hotness of vertex buffer objects makes you do all the hard work of setting up your object data in the exact format that the graphics card can process the fastest. So at the price of a few extra drops of programmer brain-juice, your users get faster graphics. And everyone’s happy!

I won’t show an example yet, because it’s too ugly to put first thing in the tutorial. Just hold on to that dream of gloriously fast graphics while we set up this new project.

Importing all of one’s projects at a stroke

This time instead of crawling through the tutorial one file at a time, I zipped up all the projects so you can slurp them into Eclipse all at once.

Download this file and this file, rename them so they end with “.zip” instead of “-zip-not-a.doc”, and unzip them inside an empty folder that you’ll use as a new Eclipse workspace. Then start Eclipse, select “File > Switch Workspace > Other…”, browse to your new workspace folder, and click “OK”. If you see the welcome screen, click the “Workbench” button on the right to go past it.

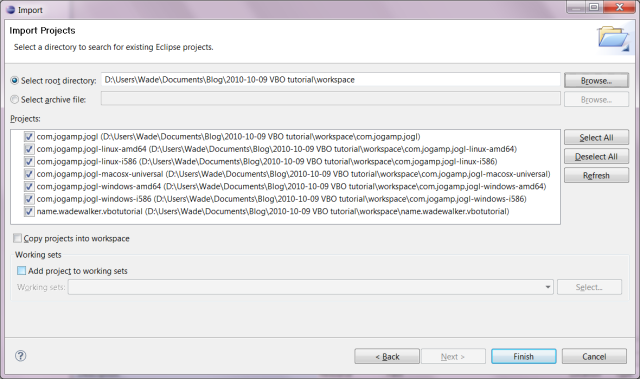

Then select “File > Import…” from the main menu, select “Existing Projects into Workspace”, and click “Next”.

Click “Browse…”, navigate to your workspace folder, and click “OK”. Then you should see these seven projects. Click “Finish” to import them into your workspace.

The word “import” is a little misleading here, since the projects were already in your workspace folder. What happened just then was Eclipse wrote into its “.metadata” folder inside the workspace folder, to tell itself which projects are in the workbench. The next time you open Eclipse, you should see the same projects again.

A rather unsporting leap forward to reveal this tutorial’s outcome

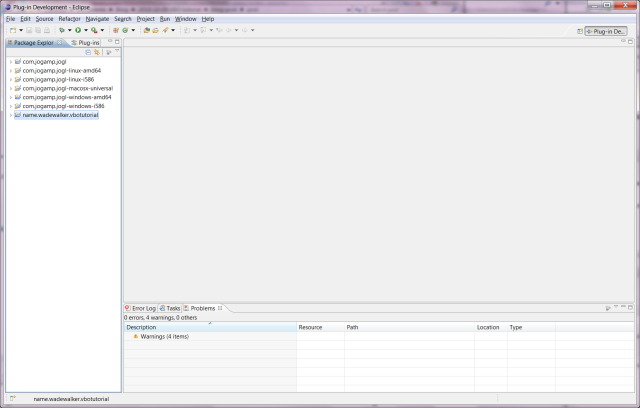

Let’s run the tutorial first to see what it does, then I’ll go back and explain it. After importing the projects, you should be seeing this:

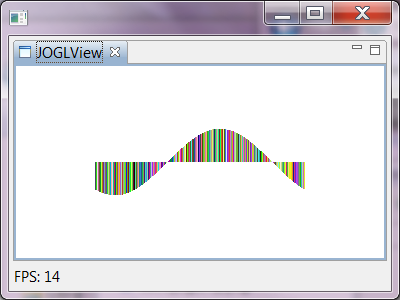

Now right-click on “name.wadewalker.vbotutorial” in the “Package Explorer” view on the left, then select “Run As > Eclipse Application.” Now bask in the glory of vertex buffer objects! Yes, bask!

Perhaps a bit of explanation is needed for this rather uninspiring image?

I’m on it. This is supposed to approximate what a scientific visualization program might do. The sine wave on screen is made of 300,000 skinny vertical quads of different colors. The quads are stationary, but they’re constantly changing size to make the sine wave look like it’s moving. The “frames per second” (FPS) counter in the bottom left shows you how fast your system can render this demo. The code that creates this data is in DataSource.java.
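For a rough idea of what such a data source might look like, here is a minimal sketch. It is not the actual DataSource.java: the class name `SineDataSketch`, the method `heights`, and the `phase` parameter are all made up for illustration, and the real code may organize things quite differently.

```java
// Hypothetical sketch, not the tutorial's DataSource.java.
final class SineDataSketch {

    /**
     * Returns one height per quad for a sine wave shifted by the given phase.
     * Advancing the phase a little each frame makes the wave appear to move,
     * even though the quads themselves stay put.
     */
    static float[] heights( int numQuads, double phase ) {
        float[] result = new float[numQuads];
        for( int i = 0; i < numQuads; i++ ) {
            // spread one full period (2*pi) across all the quads
            double angle = 2.0 * Math.PI * i / numQuads + phase;
            result[i] = (float)Math.sin( angle );
        }
        return result;
    }

    public static void main( String[] args ) {
        float[] frame0 = heights( 300_000, 0.0 );
        float[] frame1 = heights( 300_000, 0.1 );
        System.out.printf( "quad 0 height: %.3f then %.3f%n", frame0[0], frame1[0] );
    }
}
```

Calling `heights` once per frame with a slightly larger phase gives the "constantly changing" data that the renderer then has to push to the screen.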

Before an actual scientist VR-stabs me over the internet, let me say that of course no scientist would need to visualize something so simple. Because they’re all über-geniuses! But this demo is to show us how to program, not how to drop science. The point is that we have a source of data that’s constantly changing, and we have to throw it up on screen as it evolves.

So how does it work?

Creating a vertex buffer object ex nihilo

Technically it’s not created from nothing, it’s created from electrons, smarty-shorts. To see how, open up the “name.wadewalker.vbotutorial” project in the Package Explorer on the left, then drill down to “src/name.wadewalker.vbotutorial”, double-click on “JOGLView.java”, and scroll to this part.

//================================================================

/**

* Creates vertex buffer object used to store vertices and colors

* (if it doesn't exist). Fills the object with the latest

* vertices and colors from the data store.

*

* @param gl2 GL object used to access all GL functions.

* @param listDataObjects Data objects whose vertices and colors are written into the buffer.

* @return the number of vertices in each of the buffers.

*/

protected int [] createAndFillVertexBuffer( GL2 gl2, List<DataObject> listDataObjects ) {

int [] aiNumOfVertices = new int [] {listDataObjects.size() * 4};

// create vertex buffer object if needed

if( aiVertexBufferIndices[0] == -1 ) {

// check for VBO support

if( !gl2.isFunctionAvailable( "glGenBuffers" )

|| !gl2.isFunctionAvailable( "glBindBuffer" )

|| !gl2.isFunctionAvailable( "glBufferData" )

|| !gl2.isFunctionAvailable( "glDeleteBuffers" ) ) {

Activator.openError( "Error", "Vertex buffer objects not supported." );

}

gl2.glGenBuffers( 1, aiVertexBufferIndices, 0 );

// create vertex buffer data store without initial copy

gl2.glBindBuffer( GL.GL_ARRAY_BUFFER, aiVertexBufferIndices[0] );

gl2.glBufferData( GL.GL_ARRAY_BUFFER,

aiNumOfVertices[0] * 3 * Buffers.SIZEOF_FLOAT * 2,

null,

GL2.GL_DYNAMIC_DRAW );

}

// map the buffer and write vertex and color data directly into it

gl2.glBindBuffer( GL.GL_ARRAY_BUFFER, aiVertexBufferIndices[0] );

ByteBuffer bytebuffer = gl2.glMapBuffer( GL.GL_ARRAY_BUFFER, GL2.GL_WRITE_ONLY );

FloatBuffer floatbuffer = bytebuffer.order( ByteOrder.nativeOrder() ).asFloatBuffer();

for( DataObject dataobject : listDataObjects )

storeVerticesAndColors( floatbuffer, dataobject );

gl2.glUnmapBuffer( GL.GL_ARRAY_BUFFER );

return( aiNumOfVertices );

}

First we create a vertex buffer object with glGenBuffers, then do a glBufferData to allocate the size we want. Every vertex needs three floats for the position (x, y, z), and three more for the color (r, g, b). Then we use glMapBuffer to get an object we can write into, we write all our floats into it, then we call glUnmapBuffer to finish.
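To make the sizing arithmetic concrete without needing a GL context, here is a small sketch that uses a plain direct `ByteBuffer` as a stand-in for the buffer that glMapBuffer hands back. The names `BufferSizeSketch`, `bufferSizeInBytes`, and `putVertex` are invented for this example; the tutorial's own `storeVerticesAndColors` isn't shown here, so treat this as one plausible shape for it rather than the real thing.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

// Sketch only: a direct ByteBuffer stands in for the mapped vertex buffer.
final class BufferSizeSketch {
    static final int FLOATS_PER_POSITION = 3; // x, y, z
    static final int FLOATS_PER_COLOR = 3;    // r, g, b

    /** Bytes needed to hold a position and a color for every vertex. */
    static int bufferSizeInBytes( int numVertices ) {
        return numVertices * (FLOATS_PER_POSITION + FLOATS_PER_COLOR) * Float.BYTES;
    }

    /** Writes one vertex (position, then color) at the buffer's current position. */
    static void putVertex( FloatBuffer fb, float x, float y, float z,
                           float r, float g, float b ) {
        fb.put( x ).put( y ).put( z );
        fb.put( r ).put( g ).put( b );
    }

    public static void main( String[] args ) {
        int numQuads = 2;
        int numVertices = numQuads * 4;
        ByteBuffer bytes = ByteBuffer.allocateDirect( bufferSizeInBytes( numVertices ) );
        // same native-order conversion the tutorial applies to the mapped buffer
        FloatBuffer floats = bytes.order( ByteOrder.nativeOrder() ).asFloatBuffer();
        for( int i = 0; i < numVertices; i++ )
            putVertex( floats, i, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f );
        System.out.println( "bytes allocated: " + bytes.capacity() );
        System.out.println( "floats written:  " + floats.position() );
    }
}
```

The size formula is the same one passed to glBufferData above, just written as (3 + 3) floats per vertex instead of 3 floats times 2.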

Might you explain why both colors and vertices are placed into a so-called “vertex buffer”?

Aha, good point! It’s true that in math, a “vertex” is really just a point where lines meet, but in computer graphics we can also consider the color to be a part of the vertex. There can be more stuff in a vertex too, like the normal vector, texture coordinates, and other things we don’t need yet. So “vertex buffer” isn’t really a misnomer.

Why, then, do we interdigitate the positions and colors? Could we not write all of one, then all of the other?

Alternating positions and colors in a single vertex buffer speeds things up by preserving data locality. When you write the data into the buffer, you don’t want to be writing to two widely separated addresses, because your computer’s memory system isn’t good at that.

Similarly, if the graphics card has to fetch the positions from one address in memory and the colors from a very different address to render each triangle, it’s gonna be slower than if all the data for that triangle is close together.

I originally used separate position and color buffers for this tutorial, and when I switched to one “interdigitated” buffer, I got a 5% to 10% increase in frame rate.
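If you're starting from separate position and color arrays like I was, the conversion to one interleaved array might look something like this. It's a sketch in plain Java; `InterleaveSketch` and `interleave` are names I made up for the example, and it assumes three floats per vertex in each input array.

```java
import java.util.Arrays;

// Hypothetical helper: merges separate arrays into position/color/position/color order.
final class InterleaveSketch {

    /** Both inputs hold 3 floats per vertex; the output holds 6 floats per vertex. */
    static float[] interleave( float[] positions, float[] colors ) {
        if( positions.length != colors.length || positions.length % 3 != 0 )
            throw new IllegalArgumentException( "expected 3 floats per vertex in both arrays" );

        int numVertices = positions.length / 3;
        float[] out = new float[numVertices * 6];
        for( int v = 0; v < numVertices; v++ ) {
            System.arraycopy( positions, v * 3, out, v * 6, 3 );  // x, y, z
            System.arraycopy( colors, v * 3, out, v * 6 + 3, 3 ); // r, g, b
        }
        return out;
    }

    public static void main( String[] args ) {
        float[] positions = { 1, 2, 3, 4, 5, 6 };
        float[] colors = { 7, 8, 9, 10, 11, 12 };
        System.out.println( Arrays.toString( interleave( positions, colors ) ) );
    }
}
```

In the interleaved result, everything needed for one vertex sits in six adjacent floats, which is the locality the paragraphs above are talking about.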

Rendering one’s vertex buffer

Here’s the code to draw the vertex buffer we just created, also in JOGLView.java:

// needed so material for quads will be set from color map

gl2.glColorMaterial( GL.GL_FRONT_AND_BACK, GL2.GL_AMBIENT_AND_DIFFUSE );

gl2.glEnable( GL2.GL_COLOR_MATERIAL );

// draw all quads in vertex buffer

gl2.glBindBuffer( GL.GL_ARRAY_BUFFER, aiVertexBufferIndices[0] );

gl2.glEnableClientState( GL2.GL_VERTEX_ARRAY );

gl2.glEnableClientState( GL2.GL_COLOR_ARRAY );

gl2.glVertexPointer( 3, GL.GL_FLOAT, 6 * Buffers.SIZEOF_FLOAT, 0 );

gl2.glColorPointer( 3, GL.GL_FLOAT, 6 * Buffers.SIZEOF_FLOAT, 3 * Buffers.SIZEOF_FLOAT );

gl2.glPolygonMode( GL.GL_FRONT, GL2.GL_FILL );

gl2.glDrawArrays( GL2.GL_QUADS, 0, aiNumOfVertices[0] );

// disable arrays once we're done

gl2.glBindBuffer( GL.GL_ARRAY_BUFFER, 0 );

gl2.glDisableClientState( GL2.GL_VERTEX_ARRAY );

gl2.glDisableClientState( GL2.GL_COLOR_ARRAY );

gl2.glDisable( GL2.GL_COLOR_MATERIAL );

glcanvas.swapBuffers();

We use glVertexPointer and glColorPointer to set the starting positions in the buffer, with the first color pointer three floats after the first position. Then we tell the graphics card that each new position and color is six floats past the current one. We call that a “stride” of six floats, which is 24 bytes. Then finally we draw the whole buffer full of quads with just one command, glDrawArrays.
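The stride and offset numbers are easy to get wrong, so here's the arithmetic as a tiny standalone sketch. `StrideSketch` and its method names are made up for illustration; only the six-floats-per-vertex layout comes from the tutorial.

```java
// Sketch of the byte offsets implied by the interleaved layout (3 position floats, 3 color floats).
final class StrideSketch {
    static final int FLOATS_PER_VERTEX = 6;
    static final int STRIDE_BYTES = FLOATS_PER_VERTEX * Float.BYTES; // distance between vertices
    static final int COLOR_OFFSET_BYTES = 3 * Float.BYTES;           // color follows the position

    /** Byte offset of the position of the given vertex. */
    static int positionByteOffset( int vertex ) {
        return vertex * STRIDE_BYTES;
    }

    /** Byte offset of the color of the given vertex. */
    static int colorByteOffset( int vertex ) {
        return vertex * STRIDE_BYTES + COLOR_OFFSET_BYTES;
    }

    public static void main( String[] args ) {
        for( int v = 0; v < 3; v++ )
            System.out.printf( "vertex %d: position at byte %d, color at byte %d%n",
                               v, positionByteOffset( v ), colorByteOffset( v ) );
    }
}
```

The last two arguments of glVertexPointer and glColorPointer correspond to `STRIDE_BYTES` plus the offset of vertex 0 (0 for positions, `COLOR_OFFSET_BYTES` for colors); the graphics card works out every later vertex from those.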

What, then, is the benefit of all our labors?

I did this tutorial with glBegin/glEnd first, as a test. When I switched to a vertex buffer, the frame rate increased by 100%.

And this is a worst-case scenario, too. In many apps, most of the scene will be constant from one frame to the next, so we can just move the viewpoint and call glDrawArrays again without changing the vertex buffer at all.

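As a test, I changed the tutorial code to only write the vertex buffer once, and the frame rate jumped from 14 to 25 FPS. If the glBegin/glEnd version ran at roughly 7 FPS (half of 14, going by the 100% gain above), that makes vertex buffers about 3.5 times as fast as glBegin/glEnd for this more common situation.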

Might we run faster still?

There are 600K triangles here (2 per quad), and at 14 FPS, that’s 8.4 million triangles per second. With the vertex buffer written only once, we get 25 FPS, or 15M tris/sec.

I downloaded the NVIDIA performance tools and profiled a couple of C++ DirectX demos that come with them. One got 5.7M tris/sec, and one got 8.4M tris/sec. They had texturing and other effects turned on, so their rendering isn’t as simple as ours, but their triangle rates seem comparable.

But! When I look at the theoretical maximum speed, things aren’t so clear. My graphics card is an NVIDIA GeForce 8800 GTX, which can supposedly do 250M tris/sec on the 3DMark06 benchmark. That would give 417 FPS on our tutorial! Why is 3DMark06 16 times faster than us?
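Here's the back-of-the-envelope math from the last few paragraphs as a sketch you can rerun with your own numbers. The class and method names are invented for the example; the inputs (600K triangles, 14 and 25 FPS, 250M tris/sec) are the figures quoted above.

```java
// Sketch of the triangle-rate arithmetic; plug in your own frame rates.
final class TriangleRateSketch {

    /** Triangles drawn per second at a given frame rate. */
    static long trianglesPerSecond( long trianglesPerFrame, double fps ) {
        return Math.round( trianglesPerFrame * fps );
    }

    /** Frame rate you would get if the card sustained the given triangle rate. */
    static double framesPerSecond( long trianglesPerSecond, long trianglesPerFrame ) {
        return (double)trianglesPerSecond / trianglesPerFrame;
    }

    public static void main( String[] args ) {
        long trisPerFrame = 600_000;         // 300,000 quads * 2 triangles each
        long quotedMax = 250_000_000;        // the 3DMark06 figure quoted above
        long refilled = trianglesPerSecond( trisPerFrame, 14 );
        long writtenOnce = trianglesPerSecond( trisPerFrame, 25 );
        System.out.println( "refilled every frame: " + refilled + " tris/sec" );
        System.out.println( "written once:         " + writtenOnce + " tris/sec" );
        System.out.printf( "FPS at quoted max:    %.0f%n", framesPerSecond( quotedMax, trisPerFrame ) );
        System.out.printf( "gap vs written once:  %.1fx%n", (double)quotedMax / writtenOnce );
    }
}
```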

A few thought-experiments as to how our speed could be increased

Part of it may be the geometrical primitives 3DMark06 is rendering. We’re rendering quads, which have four vertices for two triangles, which means two vertices per triangle. If we jumped ahead in the OpenGL playbook and used triangle strips instead, we could get that down to one vertex per triangle, which could double our frame rate.
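To see where "two vertices per triangle" versus "one vertex per triangle" comes from, here's a quick count. This sketch assumes the best case for strips, where all the triangles can be chained into one continuous strip; whether the tutorial's geometry can actually be arranged that way is something I haven't checked. `PrimitiveCostSketch` is a made-up name.

```java
// Vertex counts for separate quads versus a single (idealized) triangle strip.
final class PrimitiveCostSketch {

    /** Separate quads: 4 vertices each, nothing shared. */
    static long quadVertices( long numQuads ) {
        return numQuads * 4;
    }

    /** One continuous strip: 2 vertices to start, then 1 more per triangle. */
    static long stripVertices( long numTriangles ) {
        return numTriangles + 2;
    }

    public static void main( String[] args ) {
        long quads = 300_000;
        long triangles = quads * 2;
        System.out.printf( "quads: %.2f vertices per triangle%n",
                           (double)quadVertices( quads ) / triangles );
        System.out.printf( "strip: %.2f vertices per triangle%n",
                           (double)stripVertices( triangles ) / triangles );
    }
}
```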

But we need to double our rate three more times to get to that theoretical max. If we removed the color information and just drew all the triangles in the same color, we could halve the size of our vertices and maybe double the speed again.

But how do we double it two more times? No clue.

Maybe changing the vertex buffer every frame is saturating the bus between my computer and the video card? Let’s check.

My video card is designed for an x16 PCI Express 1.x slot, which is rated at 4GB/sec. In this tutorial, each vertex buffer is (300,000 quads) * (4 verts/quad) * (6 floats/vert) * (4 bytes/float) = 28,800,000 bytes. At 14 FPS, that’s 403,000,000 bytes/sec, which is only about 10% of our bus capacity. So that can’t be the problem.
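The same bus calculation, as a sketch you can adapt to your own card. I'm treating "4GB/sec" as 4,000,000,000 bytes per second here, which is an assumption on my part; the class and method names are invented for the example.

```java
// Sketch of the bus-traffic estimate for refilling the vertex buffer every frame.
final class BusBandwidthSketch {

    /** Bytes in one vertex buffer: 4 vertices per quad, 6 floats per vertex. */
    static long bytesPerFrame( long quads ) {
        return quads * 4 * 6 * Float.BYTES;
    }

    /** Bytes sent per second if the whole buffer is rewritten every frame. */
    static long bytesPerSecond( long quads, long fps ) {
        return bytesPerFrame( quads ) * fps;
    }

    /** Fraction of the bus used, given its capacity in bytes per second. */
    static double fractionOfBus( long bytesPerSecond, long busBytesPerSecond ) {
        return (double)bytesPerSecond / busBytesPerSecond;
    }

    public static void main( String[] args ) {
        long traffic = bytesPerSecond( 300_000, 14 );
        long busCapacity = 4_000_000_000L; // assumed meaning of "4GB/sec"
        System.out.println( "bytes per frame:  " + bytesPerFrame( 300_000 ) );
        System.out.println( "bytes per second: " + traffic );
        System.out.printf( "share of bus:     %.1f%%%n", 100.0 * fractionOfBus( traffic, busCapacity ) );
    }
}
```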

Hmm, I’m out of ideas for the moment. But handing back my super-genius credentials isn’t an option, so I’ll look into this and cover it in a future tutorial.

Resources which the reader may find helpful

OpenGL tutorials: Check out http://nehe.gamedev.net/. These go over OpenGL more slowly and thoroughly than I do, and are recommended.

The OpenGL Programming Guide, nth edition: You can buy it for about $50 on Amazon. It’s definitive, but not exactly a tutorial. The style of OpenGL I’ve used so far is actually 1.5 to 2.0, not the latest 4.1 stuff, so make sure to get a book about the version you want.

Basic documentation: At http://www.opengl.org/ you can find docs for the C API calls. The ones in JOGL are Java-fied, but you can usually tell how to translate one to the other.

A list of the revisions which we have made to this document

Hi there,

First, your notes are excellent, I really enjoy them 😀

I have a question: does using textures have the same benefits as vertex buffers? Let’s say we have hundreds of images to make a volume rendering. I was doing it with a 3D texture; is it better to do so with a vertex buffer?

Thanks!

Hi Leandro,

I’m glad you like these tutorials! Let me know if there’s any way I can make them more helpful.

As for your question: in general, the more polygons you have, the more benefit vertex buffers will give you (compared to glBegin/glEnd).

Using vertex buffers might help speed up volumetric rendering from a 3D texture, but it’s hard for me to say without knowing more details. I assume you’re doing something like they describe at http://www.opengl.org/resources/code/samples/advanced/advanced97/notes/node181.html, where you create polygons that are perpendicular to the sight line, then texture them from a 3D texture and render them back-to-front with blending.

If you’re only creating 10 or 20 slices through your 3D texture, that might not be enough polygons to really benefit from vertex buffers. But you might still want to try it and see, since performance depends on the details of the drivers and the video hardware.

That’s it, now I get your point. The planes are rectangles, so the number of vertices is usually low; anyway, maybe I’ll do some tests.

The reason you can’t get to the max is because you’re using Java (not complaining, just explaining). When Java needs to make an API call to OpenGL (same story for .NET languages), it has to run through the JNI (Java Native Interface), which causes some slowdown. .NET applications go through a similar process called “thunking”.

Most of these benchmarks are run in such a way that there is zero bus traffic (to eliminate any waiting) and run with “ideal” triangles (ones which rasterize and plot well).

Check your cull settings too… I know that turning cull off (when it’s not actually culling anything) can give a slight speedup.

Great tutorial BTW!

Hi James,

Glad you liked the tutorial! But I gotta say, I think your explanation for my inability to reach the max polygon rate is wrong 🙂

It’s simple to write a test program to see how many calls per second you can make through JNI to a native library like OpenGL. Here’s one I tried just now:

long lStart = System.currentTimeMillis();

long lAccum = 0;

int iCalls = 1000000;

for( int i = 0; i < iCalls; i++ ) {

String s = gl.glGetString( GL.GL_VERSION );

lAccum += s.charAt( 0 );

}

long lElapsed = System.currentTimeMillis() - lStart;

System.err.printf( "Calls per second: %f\n", ((double)iCalls) / (lElapsed / 1000.0) );

System.err.printf( "Accumulator: %d\n", lAccum );

Here’s the result, on my modest 2.4GHz quad-CPU machine:

Calls per second: 341064.120055

Accumulator: 51000000

The accumulator variable is there just to convince people that the compiler isn’t optimizing away the whole loop (which shouldn’t be possible in this case anyway, since the compiler can’t know if glGetString() has side effects, but I figured I’d be 100% certain).

If you increase iCalls further, you can get call rates up into the millions of calls per second, presumably due to the compiler unrolling the loop more and more aggressively.

Since I would only need 417 calls/second to hit the max polygon rate of my video card with my buffer of 600K triangles, I don’t think JNI call overhead can be the problem.

However, you do mention some other good possibilities — perhaps the 3DMark06 triangles are tailored to rasterize quickly, or perhaps they’ve carefully set up the render modes to avoid slow paths in the drivers. I need to go back and revisit this tutorial so I can resolve the question fully.

Personally, my experience is all DirectX (That’s why I’m only just starting with OpenGL tutorials)… However I like to think I can squeeze performance out of things… (I hobby develop for low end computers)

Rereading your figure of 15M prims/s made me wonder how many my XT2 could do. It’s only got a 1.6GHz dual-core and Intel GMA graphics… While I used C++ & DirectX for my tests, I’m guessing OpenGL may be faster (I know little about OpenGL so please correct me if I’m wrong)

I managed to get 18.8M prims/second… Not bad for a laptop with onboard graphics 😛

Raw data…

9,322 prims/frame * 330fps = 3m prims/s

37,842 prims/frame * 150fps = 5.6m prims/s

85,562 prims/frame * 090fps = 7.7m prims/s

152,482 prims/frame * 054fps = 8.2m prims/s

612,162 prims/frame * 022fps = 13.4m prims/s

1,569,282 prims/frame * 016fps = 15.3m prims/s

3,834,402 prims/frame * 004.9fps = 18.78m prims/s = better than a desktop 😛

Looks like clearing/swapping the back buffers was quite expensive… Removing the call bought the first one up to 440fps… (Didn’t test for the others)…

Each test was done with all prims in camera, zoomed to fit the screen & with timers accurate to the microsecond. Index buffers were used to full effect 😀

If you want, I can zip up the source code and you can run it on yours? I’m really eager to see what a desktop could end up with (Perhaps closer to that elusive limit?) 🙂

Where’s the edit button D:

Anyway, I was going to add that you’re correct, and looking into it, the JNI overhead is only really substantial if you’re not doing much before returning and it’s something that can be done in Java anyway… My bad…

I haven’t done a direct comparison between DirectX and OpenGL, but I would suspect if you’re using large vertex buffers in both cases, the performance should be very similar, since most of the execution time is spent inside the video card driver 🙂

In my case, I know that if I wasn’t refilling the vertex buffer every frame, it would probably run about twice as fast (maybe 30M tris/sec). Most videogames seem to use two vertex buffers so the filling and rendering can run in parallel threads, but my tutorials haven’t quite got to that level of optimization yet.
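For the curious, the bookkeeping behind that two-buffer idea might look roughly like this. It's a plain-Java sketch with ordinary `FloatBuffer`s standing in for two vertex buffer objects, and it leaves out the thread synchronization a real version would need. `DoubleBufferSketch`, `fillTarget`, `drawSource`, and `swap` are all names I made up.

```java
import java.nio.FloatBuffer;

// Hypothetical ping-pong bookkeeping: one buffer is filled while the other is drawn.
final class DoubleBufferSketch {
    private final FloatBuffer[] buffers;
    private int fillIndex = 0; // buffer currently being written

    DoubleBufferSketch( int floatsPerBuffer ) {
        buffers = new FloatBuffer[] {
            FloatBuffer.allocate( floatsPerBuffer ),
            FloatBuffer.allocate( floatsPerBuffer )
        };
    }

    /** Buffer the data thread should write the next frame into. */
    FloatBuffer fillTarget() {
        return buffers[fillIndex];
    }

    /** Buffer the render thread should draw from (the previously filled one). */
    FloatBuffer drawSource() {
        return buffers[1 - fillIndex];
    }

    /** Call once both the fill and the draw for this frame have finished. */
    void swap() {
        fillIndex = 1 - fillIndex;
    }

    public static void main( String[] args ) {
        DoubleBufferSketch pair = new DoubleBufferSketch( 6 );
        pair.fillTarget().put( 0, 42.0f ); // write the next frame's data
        pair.swap();                       // the filled buffer becomes the draw source
        System.out.println( "first float to draw: " + pair.drawSource().get( 0 ) );
    }
}
```

In an actual JOGL app, each slot would hold a vertex buffer ID rather than a `FloatBuffer`, and `swap` would have to wait until both threads are done with the current frame.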

Then if I switch to triangle strips and use index buffers to reduce data traffic, I’ll bet it would be pretty close to the theoretical limit. I’m leaving all that for the future, though — don’t want to jam too much into one tutorial.

Last night I pushed my laptop up to 22mill. Email me if you write that super-optimised version and I’ll give it a run 😀

Friend of mine ran my example and now I feel bad about my computer. He got 1 billion verts per second.

Wouldn’t you want to use indexed drawing, as well as letting the graphics card do the actual position through a shader?

Right now this is doing all the computation on the CPU rather than using a shader allowing the CPU to offload to the GPU

Hi Jonathan,

You’re right, I might be able to get some performance boost using indexed primitives. I was on the fence about this, since only 25% of my vertices are shared between quads (each quad’s ending vertex is the same as the starting vertex of the next quad). Since indexed primitives require extra bandwidth for the index buffers, I wasn’t sure if the overall effect on frame rate would be positive or negative.
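Here's a rough way to put numbers on that tradeoff. This sketch assumes 32-bit indices (16-bit ones can't address this many vertices) and that each quad shares exactly one vertex with the next, as described above. It counts the total bytes for vertices plus indices; if the index buffer never changes it would only need uploading once, which would tilt things further toward indexing. `IndexedCostSketch` is a made-up name.

```java
// Sketch comparing buffer sizes for non-indexed quads versus indexed quads.
final class IndexedCostSketch {
    static final int BYTES_PER_VERTEX = 6 * Float.BYTES; // position + color
    static final int BYTES_PER_INDEX = Integer.BYTES;    // assumed 32-bit indices

    /** Every quad stores all four of its vertices. */
    static long nonIndexedBytes( long quads ) {
        return quads * 4 * BYTES_PER_VERTEX;
    }

    /** Shared vertices are stored once, plus four indices per quad. */
    static long indexedBytes( long quads ) {
        long uniqueVertices = quads * 3 + 1; // each quad shares one vertex with the next
        long indices = quads * 4;
        return uniqueVertices * BYTES_PER_VERTEX + indices * BYTES_PER_INDEX;
    }

    public static void main( String[] args ) {
        long quads = 300_000;
        long plain = nonIndexedBytes( quads );
        long indexed = indexedBytes( quads );
        System.out.println( "non-indexed bytes: " + plain );
        System.out.println( "indexed bytes:     " + indexed );
        System.out.printf( "savings:           %.1f%%%n", 100.0 * (plain - indexed) / plain );
    }
}
```

Under these assumptions the saving is under 10%, which is why I wasn't sure it would show up in the frame rate.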

As for doing the computation on the CPU instead of the GPU, that’s intentional 🙂 I’m assuming (for now) that whatever scientific algorithm is being rendered here, it’s something that doesn’t map well onto an OpenCL-style GPGPU programming model. This is the case in my own research, where I do computational fluid dynamics on meshless data — there’s too much branch divergence in the algorithms to profitably port it to OpenCL or put it into an OpenGL vertex shader.

Awesome, I actually am quite new to this, and loved the read. Know this is sort of a dead article since the last update was from April, but great of you to post a reply back so quickly!

I’m teeter tottering between implementing my first “real” opengl project in a language I use on a daily basis at work (java) or C++ which I haven’t used in any real extent since college (albeit that was only 2 years ago, lol)

Any suggestions? Also, I’m still trying to wrap my head around computing perspective and the basic math behind most common things (like perspective or camera, model-space, etc.)

Thanks!

Glad you liked it! I’m actually going to update these tutorials in the next few months, now that JOGL 2 has OpenGL 3+ contexts working on Mac OS X. This will allow me to use a more modern, shader-centric approach.

As to using your daily work language or something else to do OpenGL, I went through this same choice myself. I use Java all day at work, so for a while I figured I’d use C++ (or later C#) for my home projects, so I could learn new stuff and expand my horizons.

Problem is, it was unexpectedly hard for me to hack all day in one environment (Eclipse and Java), then come home and hack all night in a slightly different environment (C++ and DevStudio) without feeling like I was always “cold” in the second one. I was constantly having to re-figure-out tedious stuff that I already knew (like how to create complex, multi-windowed apps), so I finally decided to consolidate and just do all my development in Java.

But you may have a different experience. Some guys thrive on doing multiple things at once 🙂 You can always try it one way, then switch if you don’t like it. The important thing is just getting your project done.

I agree 100% actually, kind of leaning towards java for 2 reasons, familiarity, and maven…don’t know if I can live without Maven at this point, just so easy to add modules. Plus, dll hell has me thrown for a loop half the time, just getting OpenGL up and running in VS is a pain in the butt.

I’d love to see what you’re working on or even try to help contribute (what I can, like I said my knowledge in this area is very limited, I work on web applications as a profession, so if you want a RESTful web service written in Spring, I’m your man, not how to compute millions of triangles on the screen, lol).

Well, if you’re interested in OpenGL programming in Java, we could always use more help on JOGL 2 🙂 If so, just let me know and I’ll email you. You don’t need to be an expert to get started — just using JOGL 2 and reporting any bugs or problems you encounter would be a great help.

Absolutely, always interested in trying out new stuff!

E-mail away!

Great! Thank you!

Hello! You have some really nice tutorials here!

I’ve built an application of my own and have tried exporting it using the next tutorial but something always goes wrong, so I’ve decided to start with this one first and work my way up. 😀

However after I’ve imported everything it does not seem to run properly: http://tinypic.com/r/35lex6p/6

I am using Mountain Lion. Is it possible that they’ve messed something up, or am I missing something?

Thanks 🙂

Hi Peter,

It could be that you need a more recent build of JOGL since you’re running Mountain Lion. I’ve seen a few recent reports on the JOGL web site (e.g. at http://forum.jogamp.org/Please-help-with-Mountain-Lion-Problem-td4026415.html#a4026444) that say that the most recent build has fixed some Mountain-Lion-related problems. I haven’t tried it myself yet, though.

If you still have this problem with the most recent JOGL, could you submit a bug report at https://jogamp.org/bugzilla/ and let me know here, and I will add myself to the bug report and take a look.

Wade, great tutorial, thanks for posting. I launched a VM monitor after reading some of the other comments about performance. What I noticed is that most of the time seemed to be spent accessing the memory mapped buffer. Is that memory on the video card? If so, then perhaps the best way to optimize is to load the buffer from JOCL?

Hi Kyle,

The vertex buffers are usually stored both in system memory and in video card memory. When you lock a buffer and write to it, you’re writing to the system-memory copy. Then when you unlock the buffer, the OpenGL driver copies it back out to the video card for use by subsequent OpenGL commands. JOCL drivers work similarly. This holds for today’s desktop computers, but in mobile systems and integrated-GPU desktops, there’s only one memory system, so some of this copying may not be required.

In my case, I’m modifying the buffer every frame, since I’m drawing a mutable object (my example graph). But in most games, there’s lots of stuff drawn every frame that doesn’t change, like buildings and terrain, so the copying is not as extreme.

HELPPPPP MEEE!

I need a tutorial in OpenGL 4 (jogl), do you know where can I take some tips?

Thank you!

You might try https://github.com/elect86/modern-jogl-examples, which apparently is a port of the tutorials from http://www.arcsynthesis.org/gltut/. I’m not sure if it’s specifically OpenGL 4, but it is at least about the programmable pipeline instead of fixed-function. You might also look on http://jogamp.org/wiki/index.php/Jogl_Tutorial, or ask on http://jogamp.org/forum.html. But in general, the more modern the OpenGL feature you want to learn about, the greater chance you’ll just have to read the docs at http://www.opengl.org/sdk/docs/man4/ and just start coding. Good luck!

your archive files complicate everything

why the loads of file instead of one simple java file that only does what this post is about

Yeah, sorry, I wrote this before I had things publicly available on GitHub 🙂 If you look at https://github.com/WadeWalker you can check out the first tutorial in this series as two repositories. For this tutorial, I haven’t made it a separate repo, but you can check out the JOGL code from https://github.com/WadeWalker/com.jogamp.jogl, then use the zip file for just this tutorial. Let me know if you have problems; if so, I can always make this tutorial available as a separate GitHub repo.